Have you ever wondered how typing a few words into an AI tool creates stunning, photorealistic images in seconds? The technology behind AI image generation is both fascinating and complex, involving sophisticated neural networks that understand the relationship between human language and visual concepts.

In 2025, AI image generation has reached remarkable heights. What seemed impossible just a few years ago is now commonplace. Tools like Midjourney Version 7, OpenAI's GPT-4o image generation, and Google's Imagen 4 create images realistic enough that they are often hard to tell apart from professional photographs.

In this comprehensive guide, we'll pull back the curtain on AI image generation and reveal the mechanisms that power these revolutionary tools, from the fundamental concepts to the latest breakthroughs transforming the field.

Understanding Neural Networks: The Brain Behind AI Art

At the core of every AI image generator is a neural network, a computational system loosely inspired by the human brain. These networks consist of layers of interconnected nodes (artificial neurons). Each layer transforms the output of the one before it, so deeper layers can represent increasingly complex features.
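To make the idea of layers concrete, here is a minimal sketch of a two-layer network in plain Python. The weights, biases, and function names (`dense`, `relu`, `forward`) are made up for illustration; real image generators have billions of learned parameters and run on specialized libraries.

```python
def relu(values):
    # Activation function: keep positive values, replace negatives with zero.
    return [max(0.0, v) for v in values]


def dense(inputs, weights, biases):
    # One layer of "neurons": each row of weights produces one output value.
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def forward(inputs, layers):
    # Pass the input through every layer in order.
    # ReLU is applied after each layer except the last one.
    activations = inputs
    for index, (weights, biases) in enumerate(layers):
        activations = dense(activations, weights, biases)
        if index < len(layers) - 1:
            activations = relu(activations)
    return activations


# Hypothetical hand-picked parameters: 2 inputs -> 2 hidden neurons -> 1 output.
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),
    ([[1.0, 2.0]], [0.1]),
]

output = forward([2.0, 1.0], layers)
print(output)
```

In a trained network these numbers are not chosen by hand; they are adjusted automatically during training, which is the subject of the next section.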

When you type a prompt like "a sunset over mountains with purple clouds," the neural network doesn't search for existing images. Instead, it generates entirely new pixels based on patterns it learned from millions of training images. Think of it like an artist who has studied thousands of paintings and can now create original artwork in any style.

The Training Process: Teaching AI to See

Before an AI can generate images, it must undergo extensive training. Modern systems in 2025 use increasingly sophisticated training methods:

- Massive Dataset Collection: Training involves millions or even billions of images paired with descriptive text captions, covering everything from everyday objects to abstract concepts

- Pattern Recognition: The AI learns to associate words with visual features—connecting "fluffy" with soft textures, "neon" with bright glowing colors, or "vintage" with aged, nostalgic aesthetics

- Style Understanding: Networks learn artistic styles, compositional rules, lighting principles, and how visual elements relate to each other

- Continuous Refinement: Over millions of training iterations, the AI compares its predictions with the training examples, measures the error, and adjusts its internal weights so that its outputs match prompts more closely

- Ethical Considerations: Modern training pipelines include safeguards intended to reduce harmful outputs and to take copyright concerns into account
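The pattern-recognition idea in the list above can be illustrated with a toy example. One common way to represent such associations is with embedding vectors, where related words and images point in similar directions. The three-number vectors and the image names below are invented for illustration and do not come from any real model.

```python
import math


def cosine_similarity(a, b):
    # 1.0 means "same direction", 0.0 means "unrelated".
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical embeddings. The dimensions loosely mean
# [softness, brightness, age]; a real model learns its own dimensions.
text_embeddings = {
    "fluffy": [0.9, 0.1, 0.0],
    "neon": [0.0, 1.0, 0.0],
    "vintage": [0.1, 0.0, 0.9],
}
image_embeddings = {
    "kitten_photo": [0.8, 0.2, 0.1],
    "city_sign_photo": [0.1, 0.9, 0.0],
    "sepia_portrait": [0.2, 0.1, 0.8],
}


def best_match(word):
    # Return the image whose embedding is most similar to the word's embedding.
    text_vector = text_embeddings[word]
    return max(
        image_embeddings,
        key=lambda name: cosine_similarity(text_vector, image_embeddings[name]),
    )


for word in text_embeddings:
    print(word, "->", best_match(word))
```

During real training, the vectors start out random and are nudged until matching captions and images end up close together.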

The Revolutionary Transformer Architecture

One of the biggest breakthroughs in AI image generation came from adopting the transformer architecture, which was originally designed for language processing. Transformers revolutionized how AI understands context and relationships in data.

Unlike older sequence models that processed their input one element at a time, transformers use a mechanism called self-attention, which looks at all parts of the input at once. Imagine you're in a crowded room trying to listen to someone speak. Your brain automatically focuses on their voice while filtering out background noise. Self-attention works similarly: it helps the AI determine which parts of your prompt are most important and how different elements should relate to each other.
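Here is a minimal sketch of self-attention in plain Python, using the standard scaled dot-product form. As a simplification, each token's vector is used directly as its query, key, and value; a real transformer first passes the tokens through learned projection matrices. The token vectors are invented for illustration.

```python
import math


def softmax(scores):
    # Turn raw scores into positive weights that sum to 1.
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    # tokens: list of equal-length vectors, one per word in the prompt.
    dim = len(tokens[0])
    weights, outputs = [], []
    for query in tokens:
        # Score this token against every token (including itself).
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in tokens
        ]
        row = softmax(scores)
        weights.append(row)
        # The output is a weighted blend of all token vectors.
        outputs.append(
            [sum(w * value[d] for w, value in zip(row, tokens)) for d in range(dim)]
        )
    return weights, outputs


# Hypothetical 2-D token vectors: the first two are similar, the third is not.
tokens = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
attn_weights, attn_outputs = self_attention(tokens)

for row in attn_weights:
    print([round(w, 3) for w in row])
```

Each row of weights shows how strongly one token "listens" to the others. The first token gives more weight to the second, similar token than to the third, unrelated one.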

From Words to Pixels: The Generation Process Explained

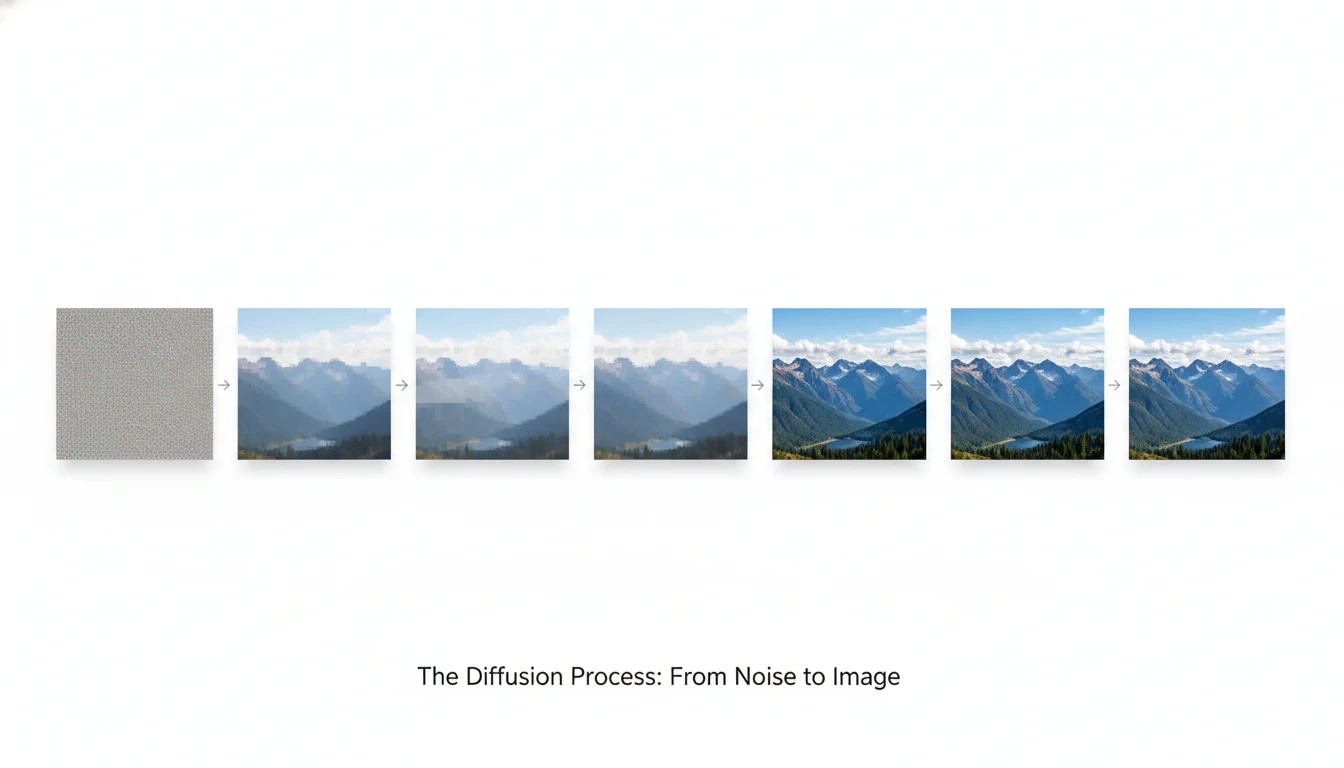

Step 3: The Diffusion Process

Most modern AI image generators in 2025 use diffusion models. This revolutionary approach starts with random noise—like static on an old television—and gradually refines it into a coherent image.

The process works through dozens or hundreds of steps. In each step, the AI makes tiny adjustments, removing a bit of noise while adding structure and details that match your prompt. It's like a sculptor chipping away at marble, slowly revealing the intended form.
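The arithmetic behind noising and denoising can be sketched in a few lines. During training, a diffusion model sees clean data blended with noise and learns to predict that noise. The sketch below uses a linear noise schedule (1,000 steps, from 0.0001 to 0.02) as an assumed, commonly used setting, and a short list of numbers stands in for an image. The true `noise` is passed in where a trained network's prediction would normally go, so this shows the math rather than a working generator.

```python
import math
import random


def make_alpha_bars(num_steps, beta_start=1e-4, beta_end=0.02):
    # alpha_bar[t] is the fraction of the original signal left after t + 1 steps.
    alpha_bars, running = [], 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(clean, noise, alpha_bar):
    # Forward process: blend the clean signal with Gaussian noise.
    return [
        math.sqrt(alpha_bar) * c + math.sqrt(1.0 - alpha_bar) * n
        for c, n in zip(clean, noise)
    ]


def estimate_clean(noisy, predicted_noise, alpha_bar):
    # Undo the blend, given a prediction of the noise that was added.
    return [
        (x - math.sqrt(1.0 - alpha_bar) * n) / math.sqrt(alpha_bar)
        for x, n in zip(noisy, predicted_noise)
    ]


rng = random.Random(0)
clean = [0.2, 0.8, -0.5, 1.0]  # stand-in for pixel values
noise = [rng.gauss(0.0, 1.0) for _ in clean]
alpha_bars = make_alpha_bars(1000)

very_noisy = add_noise(clean, noise, alpha_bars[-1])
# A trained network would predict the noise; here we pass in the true noise.
recovered = estimate_clean(very_noisy, noise, alpha_bars[-1])

print("signal left at final step:", alpha_bars[-1])
print("recovered:", [round(v, 6) for v in recovered])
```

In practice the network's noise prediction is imperfect, which is one reason generation proceeds in many small steps instead of a single jump.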

"Diffusion models learn to reverse a noise-adding process. They train by taking clear images, adding random noise, and learning how to remove that noise. During generation, they start with pure noise and apply this learned denoising process to create new images."

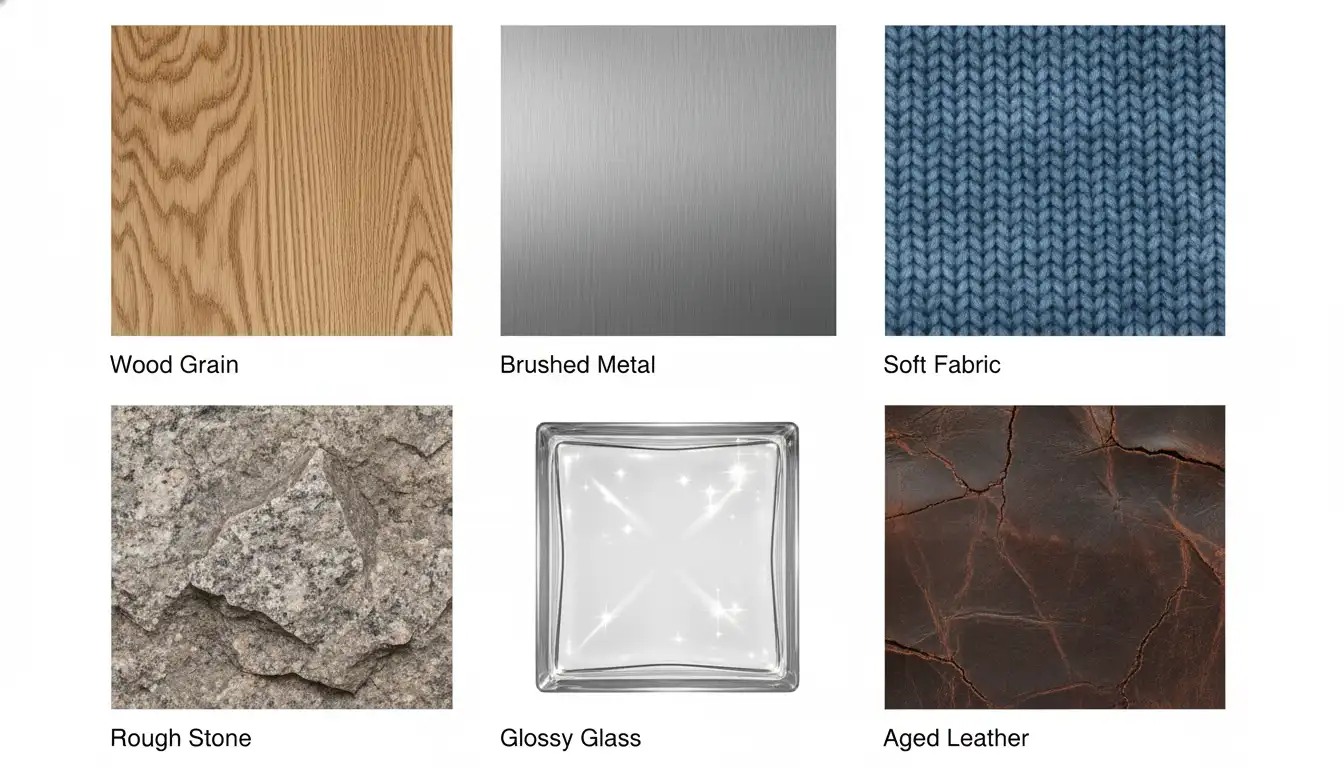

The Color and Texture Magic of 2025

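One of the most impressive aspects of modern AI image generation is how it handles color and texture. Neural networks excel at creating realistic textures, and they pick up color relationships, such as which hues tend to appear together and how lighting shifts them, from their training data rather than from explicit rules.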

Advanced Techniques and Control Methods

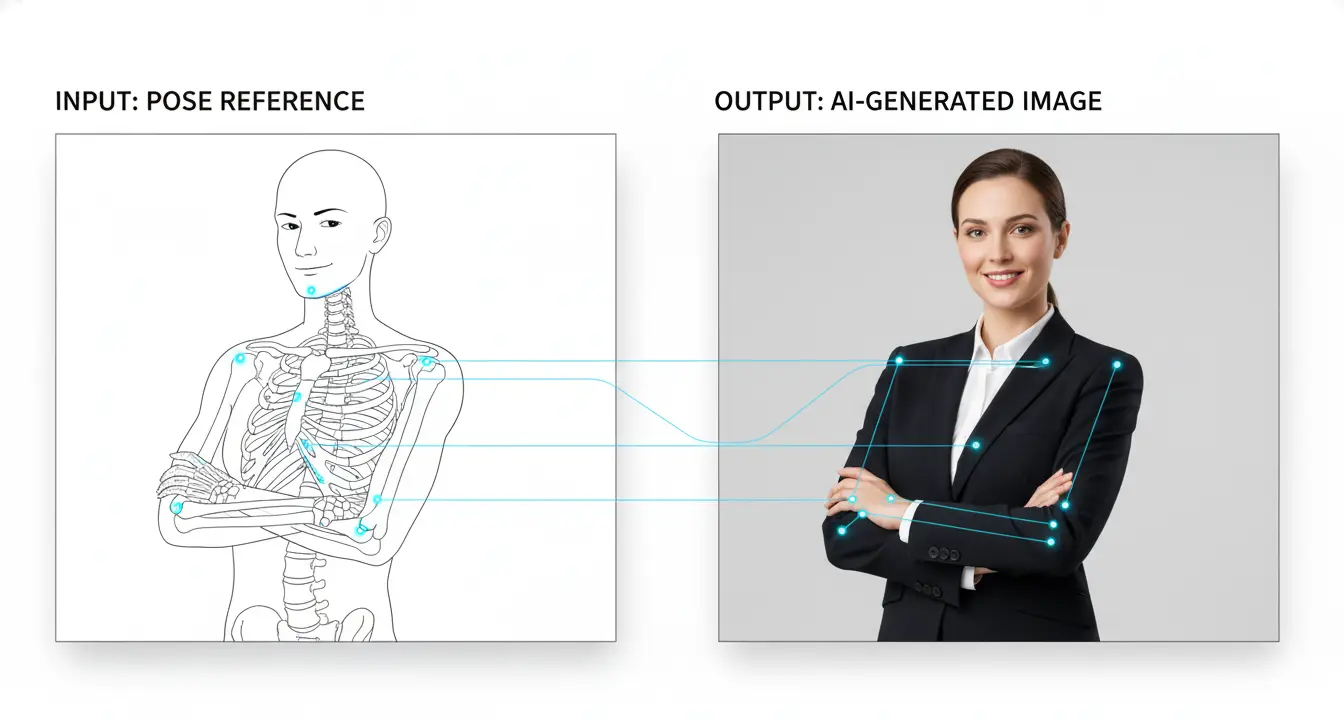

ControlNet and Conditioning

ControlNet is an auxiliary neural network that works alongside a diffusion model and steers image generation by adding conditioning on top of the text prompt. You can supply reference inputs such as pose skeletons, depth maps, or edge maps, giving you precise control over composition and structure.
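A heavily simplified sketch of the idea: the control signal is added to the base model's internal features through a connection whose weight starts at zero, so an untrained ControlNet leaves the base model's behavior unchanged. The function names and the single scalar weight are assumptions for illustration; the real architecture uses convolution layers over full feature maps.

```python
def zero_initialized_connection(control_features, weight):
    # Stand-in for a zero-initialized convolution: one scalar weight, no bias.
    return [weight * f for f in control_features]


def block_with_control(base_features, control_features, weight):
    # Add the (scaled) control signal to the base model's features.
    residual = zero_initialized_connection(control_features, weight)
    return [b + r for b, r in zip(base_features, residual)]


# Hypothetical feature values from the base model and from an edge map.
base_features = [0.3, -0.1, 0.7]
edge_features = [1.0, 0.0, 1.0]

untrained = block_with_control(base_features, edge_features, weight=0.0)
trained = block_with_control(base_features, edge_features, weight=0.5)

print("untrained:", untrained)  # identical to base_features
print("trained:  ", trained)    # shifted where the edge map is active
```

Starting from zero means training can introduce the control signal gradually without disturbing what the base model already knows.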

Conclusion: The Democratization of Visual Creativity

The secret behind AI image generation lies in sophisticated neural networks, particularly transformer architectures and diffusion models, that have learned to understand the deep relationship between language and visual concepts. Through processes involving text encoding, latent space mapping, and iterative refinement, these systems transform simple text prompts into complex, beautiful images.

In 2025, we've reached a point where AI image generation is not just a technological curiosity but a practical tool used by millions daily. The combination of faster generation speeds, higher quality outputs, better control mechanisms, and improved text rendering has made these tools indispensable for creative professionals and hobbyists alike.