Imagine being able to create a convincing copy of someone's voice from just a few minutes of audio, or to generate speech that sounds natural, with believable emotion and tone. That's what AI voice cloning technology can do today. In this guide, we'll explore how voice cloning works, the best tools available, and how you can create realistic synthetic voices for your projects.

Voice cloning has evolved dramatically from the robotic, mechanical text-to-speech systems of the past. Today's AI-powered voice synthesis can capture the subtle nuances that make human speech unique, from the slight rasp in someone's voice to the way they emphasize certain words. Whether you're a content creator, developer, or just curious about this technology, understanding how it works opens up incredible creative possibilities.

What Is AI Voice Cloning and How Does It Work?

AI voice cloning is the process of using artificial intelligence to create a digital copy of someone's voice. Once created, this synthetic voice can say anything you want, in a range of emotions and tones, without the original person needing to record new audio. It's like having a voice actor who never gets tired, works instantly, and can speak in multiple languages.

The technology behind this seems like magic, but it's actually based on deep learning, a type of AI that learns patterns from examples. Think of it like this: if you showed someone thousands of examples of handwriting, they'd eventually learn to recognize and even replicate different writing styles. Voice cloning AI does the same thing with speech patterns.

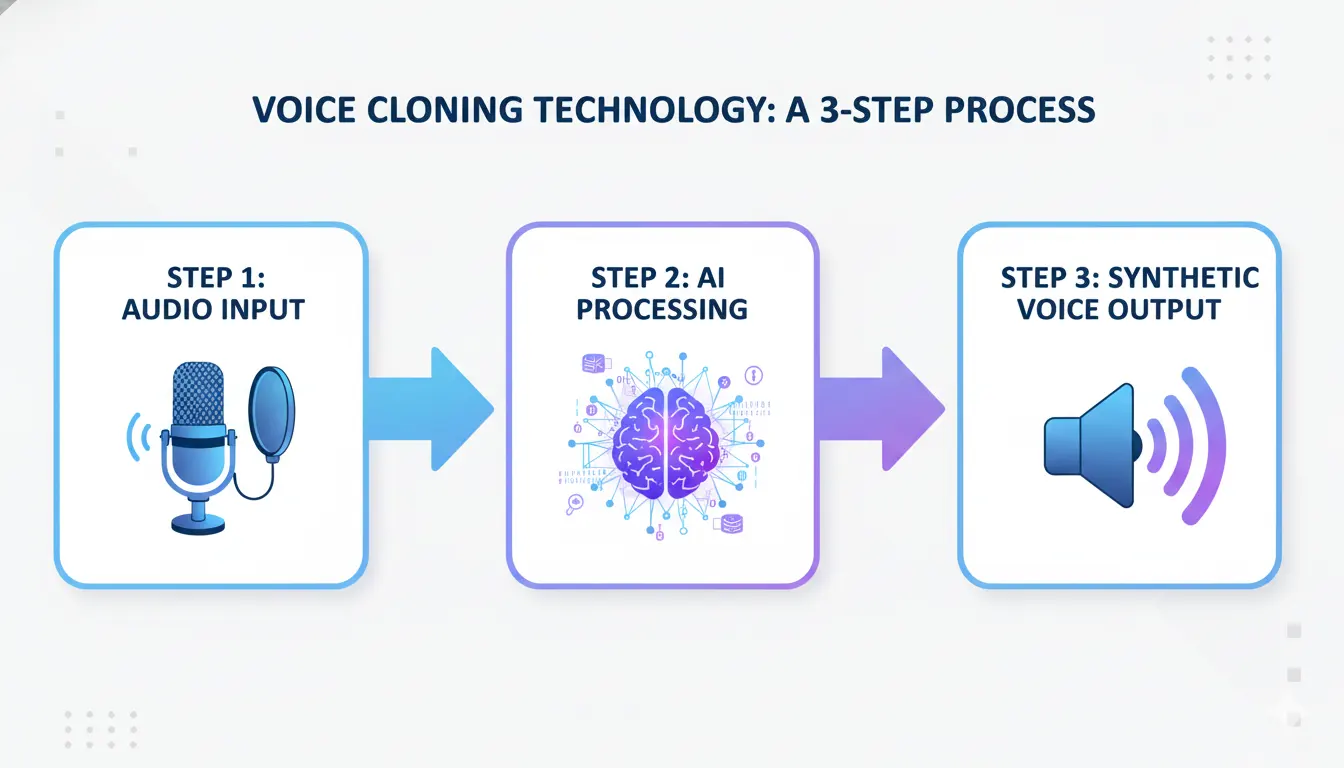

The Three Main Steps of Voice Cloning

Step 1: Voice Analysis and Recording

First, the AI needs to hear and study the target voice. Modern systems can work with as little as a few minutes of clean audio, though more audio generally produces better results. During this phase, the AI analyzes how the person speaks: their pitch range, speaking rhythm, unique vocal characteristics, accent and pronunciation patterns, and even their breathing between words.
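To make "pitch range" concrete, here is a minimal sketch of one classic way to measure pitch: autocorrelation, which finds the delay at which a slice of audio best lines up with a shifted copy of itself. This is a textbook technique chosen for illustration, not the method any particular cloning product uses; the `estimate_pitch` name and the 60-500 Hz search range are assumptions. Real systems analyze thousands of short frames and use more robust estimators.

```python
import math

def estimate_pitch(samples, sample_rate, fmin=60.0, fmax=500.0):
    """Estimate the fundamental frequency (F0) of one voiced frame, in Hz.

    Plain autocorrelation: the lag at which the frame best matches a shifted
    copy of itself approximates one pitch period.
    """
    min_lag = int(sample_rate / fmax)  # shortest period considered (highest pitch)
    max_lag = min(int(sample_rate / fmin), len(samples) - 1)  # longest period considered
    best_lag, best_score = None, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        score = sum(samples[i] * samples[i + lag] for i in range(len(samples) - lag))
        if score > best_score:
            best_lag, best_score = lag, score
    if best_lag is None:
        raise ValueError("frame is too short for the requested pitch range")
    return sample_rate / best_lag

# Usage: a synthetic 200 Hz tone sampled at 16 kHz stands in for a voiced frame.
sr = 16000
tone = [math.sin(2 * math.pi * 200 * n / sr) for n in range(2048)]
print(round(estimate_pitch(tone, sr)))  # → 200
```

Running this over many frames of a recording and taking the lowest and highest results gives a rough picture of a speaker's pitch range.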

The quality of this initial recording matters a lot. Clear audio with minimal background noise produces much better clones than noisy or low-quality recordings. Think of it like copying a painting: you'll get better results working from a high-resolution photo than from a blurry, dark image.
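One rough way to put a number on "minimal background noise" is the signal-to-noise ratio (SNR): compare the average power of a stretch containing speech with a stretch of room tone, on a decibel scale. The sketch below assumes you have already cut those two stretches out as lists of float samples, and it treats the speech segment's power as if it were pure voice, which slightly overstates SNR for noisy recordings. The sample levels in the usage lines are hypothetical.

```python
import math

def snr_db(speech, noise):
    """Signal-to-noise ratio in decibels from two lists of samples.

    `speech` is a stretch of the recording with the voice present;
    `noise` is a stretch of "silence" containing only room noise.
    """
    def power(xs):
        return sum(x * x for x in xs) / len(xs)  # mean squared amplitude
    return 10 * math.log10(power(speech) / power(noise))

# Usage: speech at amplitude 0.5 vs. noise at amplitude 0.005 (hypothetical levels).
speech = [0.5, -0.5] * 1000
noise = [0.005, -0.005] * 1000
print(round(snr_db(speech, noise)))  # → 40
```

Higher is better: every 10 dB means the voice carries ten times more power than the background noise.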

Step 2: Model Training and Learning

This is where the AI does its heavy lifting. Using the audio samples, neural networks (computer systems loosely modeled on the human brain) learn to understand and replicate the voice's unique characteristics. The AI identifies patterns in how the person forms different sounds, learns the speaker's natural rhythm and pace, captures their typical emotional range and expression, and maps out their unique vocal fingerprint.

This training process used to take days or weeks on powerful computers. Now, thanks to advances in AI technology, some systems can create usable voice clones in just minutes. The AI essentially builds a mathematical model of the voice, a blueprint it can use to generate new speech that sounds like the original speaker.
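Many modern systems represent that "vocal fingerprint" as a speaker embedding: a list of numbers produced by a neural network, where recordings of the same speaker land close together. Assuming that kind of representation, a common way to compare two voices is cosine similarity. The vectors below are invented four-number stand-ins for illustration; real embeddings are much longer and come from a trained model.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two embedding vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical embeddings, made up for illustration only.
original = [0.9, 0.1, 0.3, 0.2]
clone = [0.85, 0.15, 0.28, 0.22]
other_speaker = [0.1, 0.8, 0.2, 0.6]

# The clone should sit closer to the original than an unrelated voice does.
print(cosine_similarity(original, clone) > cosine_similarity(original, other_speaker))  # → True
```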

Step 3: Speech Synthesis and Generation

Once trained, the AI can generate new speech that sounds like the cloned voice saying anything you want. You simply type or paste text, and the system converts it into audio using the cloned voice. The synthesis process considers proper pronunciation of words, natural pauses and breathing, appropriate emotion and tone, and realistic intonation patterns.

The result is audio that sounds remarkably like the original person speaking, even though they never actually said those specific words. It's similar to how a skilled impressionist can capture someone's voice and mannerisms well enough to say new things in their style, except the AI can do it instantly and at scale.
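Getting pronunciation right usually starts before any audio is generated: text passes through a normalization step so the system knows how to say abbreviations and numbers. The sketch below shows the idea with a tiny, hypothetical abbreviation table and single-digit expansion; production text front ends handle far more cases and use context to resolve ambiguity.

```python
import re

# Hypothetical abbreviation table; a real front end is much larger and
# context-aware ("St." can mean "Street" or "Saint").
ABBREVIATIONS = {"Dr.": "Doctor", "Mr.": "Mister", "etc.": "et cetera"}
ONES = ["zero", "one", "two", "three", "four", "five", "six", "seven", "eight", "nine"]

def normalize(text):
    """Rewrite text into words a speech engine can pronounce directly."""
    for abbr, spoken in ABBREVIATIONS.items():
        text = text.replace(abbr, spoken)
    # Spell out standalone single digits; longer numbers are left alone in this sketch.
    return re.sub(r"\b\d\b", lambda m: ONES[int(m.group())], text)

print(normalize("Dr. Lee has 3 cats."))  # → Doctor Lee has three cats.
```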

Understanding Emotion and Tone in AI Voice Synthesis

What really separates modern voice AI from older text-to-speech technology is the ability to convey emotion and adjust tone. This is what makes synthetic voices sound natural rather than robotic.

Emotional Range and Expression

Today's best voice AI tools can generate speech with a wide range of emotions. You're not limited to a flat, monotone delivery. Instead, you can specify how you want the voice to sound: happy and enthusiastic for promotional content, calm and soothing for meditation or sleep content, serious and authoritative for news or educational material, sad or empathetic for emotional storytelling, or excited and energetic for entertainment.

The AI achieves this by adjusting multiple vocal parameters at once. It isn't just changing one thing; it's orchestrating a combination of pitch variation, speaking speed, vocal energy, emphasis patterns, and pausing rhythm to create authentic-sounding emotion.
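One vendor-neutral way to express such a bundle of settings is SSML (Speech Synthesis Markup Language), a W3C standard whose `<prosody>` element sets rate, pitch, and volume together. The preset values and the `to_ssml` helper below are hypothetical, chosen only to show how one emotion maps to several parameters at once. Support for SSML varies by platform, and many neural voice tools expose their own style controls instead, so check your tool's documentation.

```python
from xml.sax.saxutils import escape

# Hypothetical presets: each emotion is a bundle of prosody settings written
# in SSML <prosody> attribute syntax (relative rate and pitch, named volume).
EMOTION_PRESETS = {
    "excited": {"rate": "115%", "pitch": "+10%", "volume": "loud"},
    "calm": {"rate": "85%", "pitch": "-5%", "volume": "soft"},
    "serious": {"rate": "95%", "pitch": "-10%", "volume": "medium"},
}

def to_ssml(text, emotion):
    """Wrap text in an SSML <prosody> element built from an emotion preset."""
    attrs = " ".join(f'{k}="{v}"' for k, v in EMOTION_PRESETS[emotion].items())
    return f"<speak><prosody {attrs}>{escape(text)}</prosody></speak>"

print(to_ssml("Welcome back!", "calm"))
# → <speak><prosody rate="85%" pitch="-5%" volume="soft">Welcome back!</prosody></speak>
```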

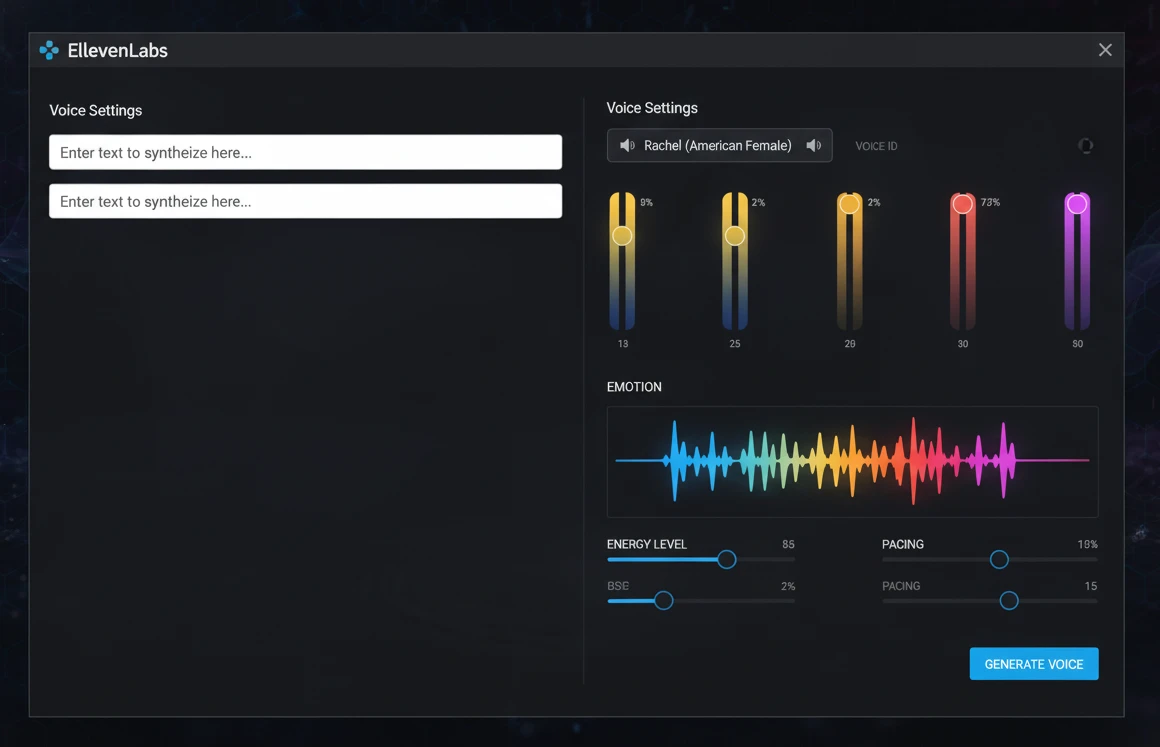

Fine-Tuning Voice Characteristics

Beyond basic emotions, advanced voice AI tools let you control specific aspects of how the voice sounds. Speaking pace can be adjusted, faster for exciting content or slower for dramatic emphasis. Energy levels can range from subdued and gentle to powerful and commanding. Speaking style can shift from casual conversation to formal presentation. Emphasis allows you to stress specific words or phrases for impact.

This level of control means you can fine-tune the delivery to match your exact needs. It's like having a voice actor who takes direction perfectly every single time, instantly adapting their performance to your specifications.
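In SSML terms, emphasis and pauses map onto the `<emphasis>` and `<break>` elements. The `emphasize` helper below is a hypothetical, naive sketch: it marks the first occurrence of a word and optionally adds a pause after it, escaping the text so characters like `&` remain valid XML.

```python
from xml.sax.saxutils import escape

def emphasize(sentence, word, level="strong", pause_ms=0):
    """Wrap the first occurrence of `word` in SSML <emphasis>, optionally adding a pause after it.

    Naive sketch: it matches substrings, so "Never" would also match inside "Nevertheless".
    """
    marked = f'<emphasis level="{level}">{escape(word)}</emphasis>'
    if pause_ms:
        marked += f'<break time="{pause_ms}ms"/>'
    return escape(sentence).replace(escape(word), marked, 1)

print(f"<speak>{emphasize('Never share your password.', 'Never', pause_ms=200)}</speak>")
# → <speak><emphasis level="strong">Never</emphasis><break time="200ms"/> share your password.</speak>
```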

Best AI Voice Cloning Tools in 2025

Several companies have emerged as leaders in voice cloning technology. Each has strengths that make them better for different use cases.

ElevenLabs: The Industry Leader

ElevenLabs has become synonymous with high-quality voice cloning. Their technology produces remarkably natural-sounding voices with excellent emotional range. The platform supports multiple languages and dialects, making it perfect for global content creation.

What makes ElevenLabs stand out is their emotional control. You can adjust not just what the voice says, but how it says it, with granular control over delivery style. Content creators love it for YouTube videos, podcasts, and audiobooks. The quality is high enough that many listeners can't tell it's AI-generated.

PlayHT: Ultra-Realistic Voice Synthesis

PlayHT focuses on creating voices that sound indistinguishable from human recordings. Their platform offers fine-grained control over pronunciation, which is crucial for technical content, proper nouns, or specialized vocabulary. The system lets you create custom pronunciations for words, adjust speaking speed on a per-sentence basis, and control pauses and breathing sounds.

This makes PlayHT particularly popular with e-learning creators, corporate training developers, and anyone producing content where precise delivery matters.
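PlayHT's own pronunciation tools are not reproduced here. As a vendor-neutral illustration of the same idea, SSML's `<phoneme>` element attaches an IPA transcription to a word so the engine pronounces it as specified. The lexicon entries and the `apply_lexicon` helper are hypothetical.

```python
import re
from xml.sax.saxutils import escape, quoteattr

# Hypothetical custom lexicon: word -> IPA transcription.
LEXICON = {"Nguyen": "wɪn", "SQL": "ˈsiːkwəl"}

# One combined pattern, so a replacement is never re-scanned by a later entry.
PATTERN = re.compile(r"\b(" + "|".join(map(re.escape, LEXICON)) + r")\b")

def apply_lexicon(text):
    """Wrap lexicon words in SSML <phoneme> tags carrying their IPA pronunciation."""
    def to_phoneme(match):
        word = match.group(1)
        return f'<phoneme alphabet="ipa" ph={quoteattr(LEXICON[word])}>{word}</phoneme>'
    return PATTERN.sub(to_phoneme, escape(text))

print(apply_lexicon("Ms. Nguyen writes SQL daily."))
# → Ms. <phoneme alphabet="ipa" ph="wɪn">Nguyen</phoneme> writes <phoneme alphabet="ipa" ph="ˈsiːkwəl">SQL</phoneme> daily.
```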

Resemble AI: Developer-Friendly Voice API

While ElevenLabs and PlayHT are best known for their creator-facing platforms, Resemble AI targets developers who want to integrate voice synthesis into their own applications. Its API supports real-time voice generation, making it well suited to interactive applications like video games, virtual assistants, or chatbots.

Resemble also offers voice conversion, where you can take existing audio and change it to sound like a different voice while maintaining the original timing and emotion. This is incredibly useful for content localization or dubbing.

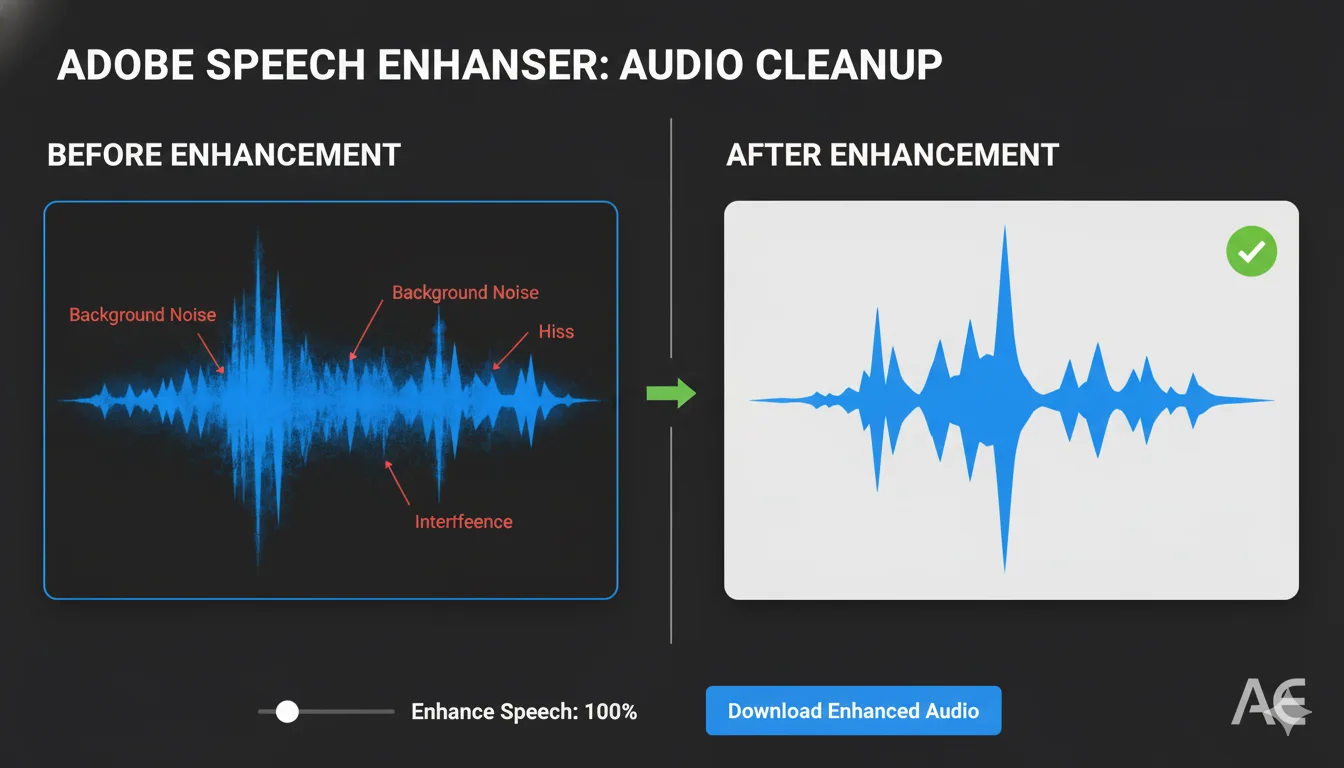

Adobe Speech Enhancer: Quality Improvement

While not strictly a voice cloning tool, Adobe's Speech Enhancer deserves mention. It uses AI to clean up poor-quality audio by removing background noise, echo, and distortion. This is crucial for voice cloning because the quality of your input audio directly affects the quality of the clone.

Many voice cloning workflows now include a step where audio is first processed through Speech Enhancer or similar tools to ensure the cleanest possible input for training.
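Speech Enhancer's own processing is AI-based and is not shown here. As a much simpler illustration of what a basic cleanup step can do, the hypothetical sketch below trims near-silence from the start and end of a clip and normalizes its peak level. The threshold and target values are assumptions.

```python
def trim_and_normalize(samples, threshold=0.02, target_peak=0.9):
    """Trim leading/trailing near-silence, then scale so the loudest sample hits target_peak.

    Samples are floats in [-1.0, 1.0]. This is simple amplitude gating,
    not AI noise removal.
    """
    loud = [i for i, s in enumerate(samples) if abs(s) >= threshold]
    if not loud:
        return []  # nothing above the threshold: treat the clip as silence
    trimmed = samples[loud[0]:loud[-1] + 1]
    peak = max(abs(s) for s in trimmed)
    return [s * target_peak / peak for s in trimmed]

clip = [0.0, 0.01, 0.3, -0.45, 0.2, 0.005, 0.0]
print([round(s, 2) for s in trim_and_normalize(clip)])  # → [0.6, -0.9, 0.4]
```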

Real-World Applications of Voice Cloning Technology

Voice AI isn't just a cool technology demo; it's solving real problems and enabling new creative possibilities across industries.

Content Creation: Audiobooks and Podcasts

Creating audiobooks traditionally requires hiring a voice actor, booking studio time, and spending hours or days recording. Voice AI changes this completely. Authors can now create audiobook versions of their work in hours instead of weeks. Podcasters can maintain consistent audio quality even when recording conditions aren't perfect. Content creators can generate voiceovers for videos without recording each one manually.

This dramatically lowers the barrier to entry for audio content creation. Small creators can now produce professional-quality audio content without expensive equipment or hiring voice talent.
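Long manuscripts usually have to be sent to a synthesis service in pieces, since many TTS APIs cap the length of a single request. Here is a minimal sketch of sentence-aware chunking; the `chunk_text` name and the 500-character default are assumptions, not any platform's actual limit, and the sentence splitter is deliberately simple (it will also split after "Dr.", for example).

```python
import re

def chunk_text(text, max_chars=500):
    """Split text into chunks of at most max_chars, breaking only between sentences.

    A single sentence longer than max_chars becomes its own (oversized) chunk.
    """
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    chunks, current = [], ""
    for sentence in sentences:
        candidate = f"{current} {sentence}".strip()
        if len(candidate) <= max_chars or not current:
            current = candidate  # the sentence still fits in the current chunk
        else:
            chunks.append(current)  # close the full chunk and start a new one
            current = sentence
    if current:
        chunks.append(current)
    return chunks

chapter = "It was late. The rain had stopped. Somewhere, a dog barked twice."
print(chunk_text(chapter, max_chars=30))
# → ['It was late.', 'The rain had stopped.', 'Somewhere, a dog barked twice.']
```

Breaking at sentence boundaries keeps each request's intonation natural, since a synthesizer given half a sentence cannot know how it ends.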

Accessibility and Assistive Technology

For people with speech disabilities or those who have lost their voice due to illness, voice cloning offers something incredibly valuable: the ability to preserve or restore their natural speaking voice. Patients facing voice-affecting surgery can record their voice beforehand and use the clone afterward. People with degenerative conditions can preserve their voice while they still have it.

This isn't just about communication; it's about maintaining identity and personal connection. Your voice is part of who you are, and voice AI can help preserve that.

Entertainment: Video Games and Animation

Video game developers use voice AI to create dialogue for NPCs (non-player characters) without recording every possible line. This enables more dynamic, responsive dialogue systems where characters can say contextually appropriate things in real-time. Animation studios can prototype dialogue and timing before booking expensive voice actor sessions.

Business: Customer Service and Training

Companies are using voice AI to create more natural-sounding virtual assistants and chatbots. Instead of robotic phone trees, customers can interact with AI that sounds genuinely human and helpful. Training materials can be voiced consistently across updates without re-recording everything. Multilingual support becomes more feasible when you can generate natural-sounding speech in dozens of languages.

Content Localization and Translation

Perhaps one of the most exciting applications is combining voice cloning with translation AI. Imagine watching a video where a Spanish speaker appears to be fluently speaking English, in their own voice. The technology can translate the words while maintaining the speaker's unique vocal characteristics, making international content feel more personal and authentic.

Ethical Considerations and Responsible Use

With great power comes great responsibility, and voice cloning is no exception. The same technology that enables amazing creative and accessibility applications can also be misused.

Consent and Authorization

The most fundamental ethical question is consent. You should never clone someone's voice without their explicit permission. Most reputable voice cloning platforms require users to confirm they have rights to use the voice they're cloning. Some even require uploading a consent statement read by the voice owner.

This isn't only an ethical issue: unauthorized voice cloning can have legal consequences. A voice can be considered part of a person's identity, and using someone's voice without permission could be seen as identity theft or fraud.

Deepfakes and Misinformation

Voice cloning technology can be used to create "deepfake" audio, recordings that sound like someone saying something they never actually said. This raises serious concerns about misinformation, fraud, and manipulation. Bad actors could potentially create fake audio of public figures making false statements or impersonate someone to commit fraud.

The industry is responding with detection technologies and watermarking systems to identify AI-generated audio. Many platforms also build in safeguards to prevent obvious misuse, though this remains an ongoing challenge.

Impact on Voice Actors

Professional voice actors understandably have concerns about AI potentially replacing human performers. While AI can generate speech efficiently, it currently lacks the creative interpretation and emotional depth that skilled voice actors bring to character work and storytelling.

Many in the industry see a hybrid future where AI handles routine voiceover work (like narrating technical documentation), while human voice actors focus on creative, interpretive performances that require genuine emotional intelligence and artistic choices.

Best Practices for Ethical Use

If you're using voice cloning technology, follow these guidelines: always obtain explicit consent before cloning someone's voice, clearly disclose when audio is AI-generated rather than authentic recordings, use the technology to enhance rather than deceive, respect voice actors' rights and compensation, and implement safeguards against fraudulent use.

Getting Started with Voice Cloning

Ready to try voice cloning yourself? Here's how to get started with good results.

Preparing Your Voice Sample

Quality input creates quality output. Record in a quiet environment with minimal background noise. Use a decent microphone: your phone will work, but a USB microphone is better. Speak naturally at your normal pace and volume. Include varied sentences that demonstrate your vocal range. Aim for at least 5-10 minutes of clear audio.

The more natural and varied your sample, the better the AI can learn your voice's full characteristics.
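Before uploading, it's worth checking that a recording actually meets the length guideline. A small sketch using Python's standard `wave` module, assuming an uncompressed WAV file; the `describe_sample` helper is hypothetical, and its 5-minute default is simply the low end of the 5-10 minute range, not any platform's requirement.

```python
import io
import wave

def describe_sample(wav_file, min_minutes=5.0):
    """Report a WAV recording's duration and format, and whether it meets a minimum length."""
    with wave.open(wav_file, "rb") as w:
        minutes = w.getnframes() / w.getframerate() / 60
        return {
            "minutes": round(minutes, 2),
            "sample_rate": w.getframerate(),
            "channels": w.getnchannels(),
            "long_enough": minutes >= min_minutes,
        }

# Usage with an in-memory, 2-second silent file (in practice, pass a path to your recording).
buf = io.BytesIO()
with wave.open(buf, "wb") as w:
    w.setnchannels(1)
    w.setsampwidth(2)  # 16-bit samples
    w.setframerate(44100)
    w.writeframes(b"\x00\x00" * 44100 * 2)
buf.seek(0)
print(describe_sample(buf))
# → {'minutes': 0.03, 'sample_rate': 44100, 'channels': 1, 'long_enough': False}
```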

Choosing the Right Tool

Consider what you need the voice for. For content creation and general use, ElevenLabs or PlayHT are excellent choices. For development and API integration, look at Resemble AI. For multilingual content, choose platforms with strong language support. Check pricing: some platforms charge per character, others per minute of audio generated.
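To compare the two pricing models for a given project, convert both to the same unit. The sketch below assumes a speaking rate of roughly 900 characters per minute of audio (about 150 words per minute at about 6 characters per word, spaces included); the prices are made-up placeholders, not any platform's actual rates.

```python
def cost_per_character(chars, price_per_1k_chars):
    """Cost when a plan bills by characters of input text."""
    return chars / 1000 * price_per_1k_chars

def cost_per_minute(chars, price_per_minute, chars_per_minute=900):
    """Cost when a plan bills by minutes of generated audio.

    Assumption: about 900 characters of text produce one minute of speech.
    """
    return chars / chars_per_minute * price_per_minute

script_chars = 90_000  # about 100 minutes of narration under the assumption above
# Hypothetical prices for illustration only.
print(f"per-character plan: ${cost_per_character(script_chars, 0.30):.2f}")  # → $27.00
print(f"per-minute plan: ${cost_per_minute(script_chars, 0.25):.2f}")  # → $25.00
```

Which model comes out cheaper depends heavily on the real prices and on how fast the chosen voice actually speaks, so it's worth rerunning the comparison with your own numbers.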

Testing and Iteration

Your first clone might not be perfect. Test it with various types of content and adjust. Most platforms let you refine the clone or adjust generation settings. Experiment with emotion and pacing controls to get natural-sounding results.

The Future of Voice AI Technology

Voice cloning technology continues to evolve rapidly. We're moving toward even more natural synthesis, real-time voice conversion during live calls, better emotional range and expression, seamless language translation with voice preservation, and integration with video to create full audio-visual deepfakes.

Within a few years, distinguishing between AI-generated and human speech will become increasingly difficult. This makes understanding the technology, both its capabilities and limitations, more important than ever.

Final Thoughts

Voice cloning and AI sound design have transformed from science fiction to practical tools available to anyone. The technology enables incredible creative possibilities, improves accessibility, and streamlines content creation. At the same time, it requires responsible use and ethical consideration.

Whether you're a content creator looking to streamline production, a developer building voice-enabled applications, or someone curious about AI capabilities, understanding voice cloning technology prepares you for a future where synthetic speech becomes increasingly common. The key is using these powerful tools thoughtfully and responsibly, enhancing human creativity rather than replacing authentic human connection.

As voice AI continues to improve, the line between artificial and authentic will blur. But with proper understanding, ethical guidelines, and creative application, this technology can amplify human expression and make audio content creation accessible to everyone.