Picture this: you type "a peaceful mountain lake at sunrise with mist rising from the water," and within seconds, a breathtaking image appears on your screen. It's not pulled from Google or a stock photo library—it's brand new, created just for you. I'm going to walk you through exactly how this happens, breaking down the entire process in a way that actually makes sense.

Understanding AI Image Creation: More Than Just Magic

When you use an AI image generator, you're interacting with a sophisticated system that's learned to understand both language and visual concepts. Think of it as having a conversation with an incredibly talented artist who's studied millions of paintings, photographs, and drawings—except this artist works at lightning speed and never gets tired.

The technology behind AI image generation represents one of the biggest breakthroughs in artificial intelligence over the past few years. What used to take hours of manual work or expensive photo shoots now happens in seconds. Whether you're running a small business, creating content, or simply exploring your creativity, understanding how this technology works helps you get better results.

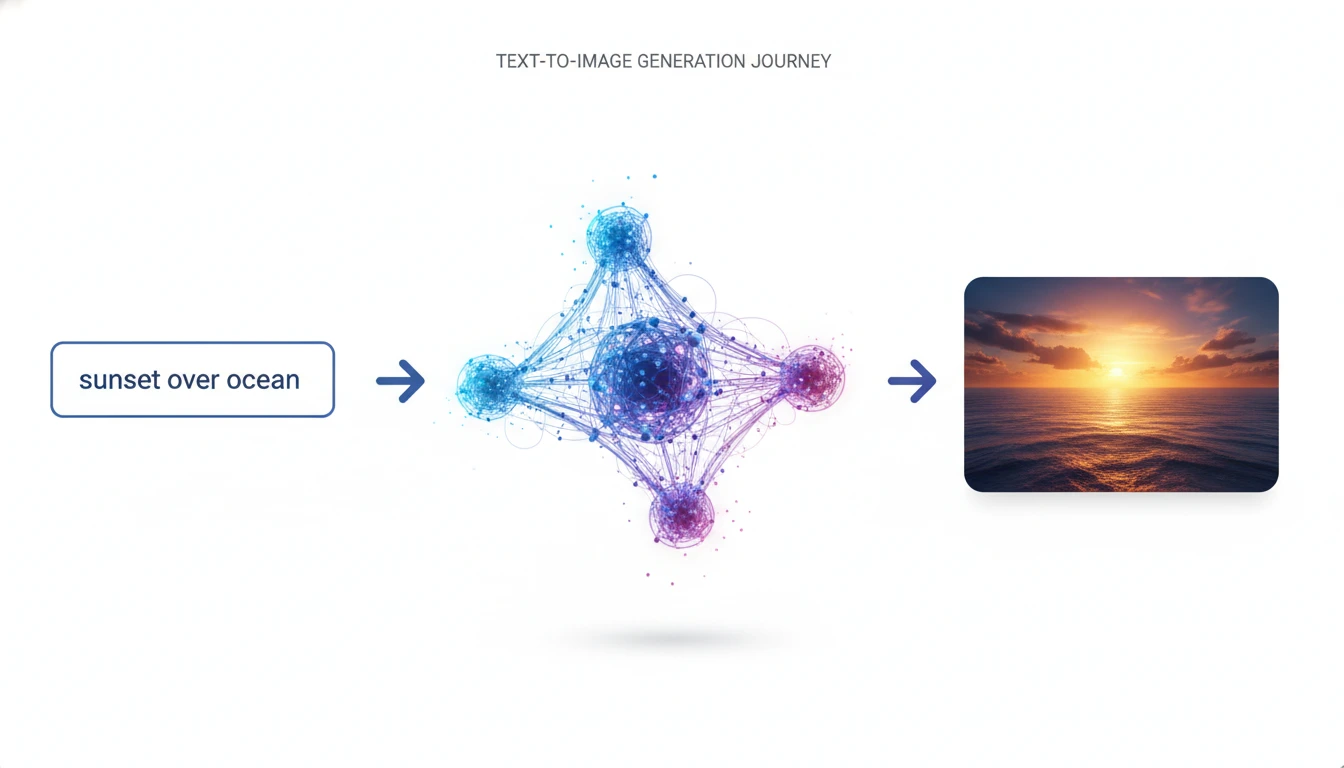

The transformation journey: How your text prompt becomes a visual masterpiece

Breaking Down the Magic: Five Essential Steps

The journey from text to image isn't instant magic—it's a carefully orchestrated process involving multiple specialized AI systems working together. Let me take you through each stage so you understand what's really happening behind the scenes.

Stage One: Making Sense of Your Words

The moment you hit that generate button, your prompt enters a language processing system that's remarkably sophisticated. This isn't just reading your words—it's understanding context, relationships, and implied meanings.

Let's say you type "vintage coffee shop interior." The AI doesn't just see three separate words. It understands that "vintage" suggests a particular time period with specific design elements, warm colors, and nostalgic atmosphere. It knows "coffee shop" implies certain furniture, equipment, and spatial arrangements. "Interior" tells it you want an indoor view, not a street façade.

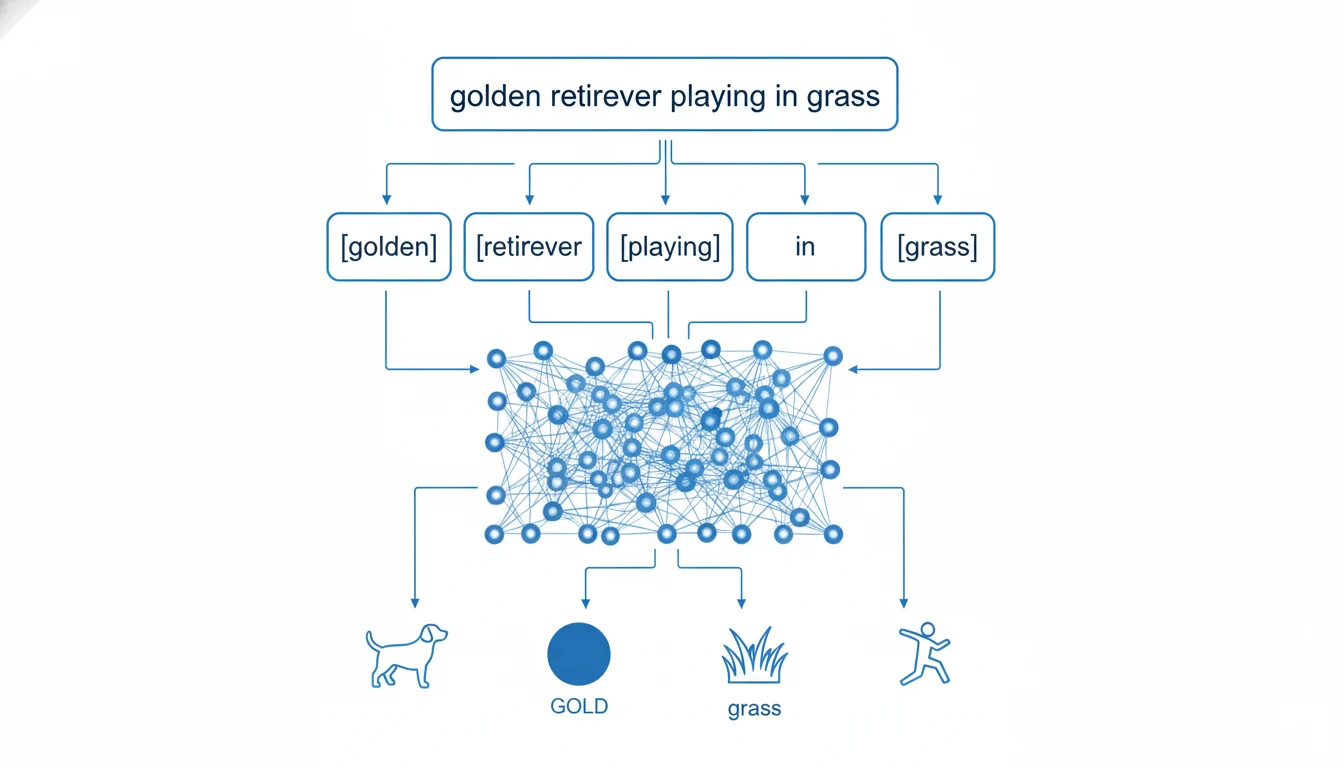

The system breaks your description into smaller units called tokens. Each token carries meaning, and the AI analyzes how these tokens relate to each other. When you write "golden retriever playing fetch," it recognizes:

- The main subject is a specific dog breed with distinct characteristics

- The dog should be in motion, showing playful behavior

- There should be a ball or toy present in the scene

- The setting is likely outdoors with space for activity

- The overall mood should be energetic and joyful

This deep understanding happens through transformer neural networks—the same technology that powers ChatGPT. These networks use attention mechanisms that prioritize important words while considering the full context of your prompt. When processing "majestic lion resting under acacia tree," the AI pays special attention to "majestic" and "resting," ensuring the final image captures both power and tranquility.

🧠 Why This Matters

Understanding how AI interprets language helps you write better prompts. Instead of listing random words, structure your descriptions naturally. "A cozy reading nook with afternoon sunlight streaming through windows" works better than "cozy, reading, nook, sunlight, windows" because the AI can parse relationships between concepts.

Behind the scenes: AI's language processing breaks down your words into meaningful concepts

Stage Two: Connecting Language to Visual Concepts

This stage represents one of the most significant breakthroughs in AI image generation. The system needs to translate your text into visual understanding, and it does this through a technology called CLIP (Contrastive Language-Image Pre-training).

CLIP learned by examining hundreds of millions of images paired with their descriptions. Imagine showing someone thousands of photos of beaches while telling them "this is a beach." Eventually, they'd recognize all the visual patterns that make a beach—sand, water, horizon, maybe palm trees or umbrellas. CLIP did exactly this, but with millions of images covering virtually every concept imaginable.

Through this training, CLIP built a multidimensional understanding where similar concepts cluster together. In this conceptual space:

- "Kitten" and "puppy" are relatively close—both young, small, furry animals

- "Ocean" and "sea" occupy nearly identical positions

- "Modern architecture" and "contemporary building" map to similar regions

- "Sunrise" and "sunset" are nearby but distinct, sharing warm colors but different lighting directions

When you submit your prompt, CLIP converts it into coordinates in this conceptual space. These coordinates become the target destination—where the image generator aims to create its output. The more precise and descriptive your prompt, the more accurately CLIP can pinpoint the exact location in this vast space of possibilities.

💡 Practical Tip: Use synonyms and related terms strategically. If "cyberpunk cityscape" doesn't give you what you want, try "futuristic neon metropolis" or "tech noir urban landscape." These all point to similar but slightly different regions in conceptual space, giving you variations to work with.

Stage Three: Building Images from Digital Noise

Here's where things get really interesting. The actual image creation process works backwards from what you might expect. Instead of drawing an image from scratch like a human artist would, the AI starts with pure randomness and gradually refines it into your desired image.

This approach is called diffusion modeling, and understanding it helps demystify the entire process. Imagine taking a photograph and slowly adding random static to it until you can't see anything but visual noise—like an old TV with no signal. Diffusion models learned to reverse this process during their training.

The diffusion magic: Watch as random noise becomes a detailed image step by step

The Four Phases of Creation

Phase 1: Starting with Chaos

Every image generation begins with a canvas of completely random pixels. There's no structure, no pattern, no hint of what's coming—just digital static. This randomness is actually crucial because it means each generation is unique.

Phase 2: Guided Transformation

Now the AI begins its work, removing noise in tiny increments while adding meaningful structure. But here's the critical part: it's not random denoising. Every single step is guided by your text prompt—that target location in conceptual space we discussed.

Think of it like a sculptor working with foggy glasses that gradually clear. At first, with very blurry vision, they can only make broad changes to overall shapes. As their vision improves with each cleaning, they can add increasingly fine details. The AI works similarly, establishing composition first, then major elements, then fine textures and details.

In early steps, you might see vague shapes and color regions. Middle steps reveal recognizable objects and their relationships. Final steps add texture, lighting nuances, and polished details that make images look professional.

Phase 3: Iterative Refinement

This transformation doesn't happen in one leap. Depending on settings, the AI performs anywhere from 20 to 150 denoising steps. Each step makes the image slightly clearer and more aligned with your description.

More steps generally mean better quality, but there's a point of diminishing returns. Around 40-60 steps, most prompts reach optimal quality. Beyond that, improvements become minimal while generation time increases significantly.

Phase 4: Final Enhancement

In the closing stages, specialized networks polish the image. These enhancement systems:

- Sharpen details to create crisp, professional-looking results

- Adjust color balance for natural, appealing tones

- Fix any remaining artifacts or inconsistencies

- Add texture richness that makes surfaces look realistic

- Upscale resolution if needed, often to 2K or 4K quality

⚠️ Important Note: More isn't always better when it comes to generation steps. The sweet spot balances quality with generation speed. For most platforms, 30-50 steps provide excellent results without unnecessary waiting. Experiment to find what works for your needs.

Quality matters: Comparing 20 steps versus 50 steps shows the impact of iteration count

Stage Four: The Training That Makes It Possible

To truly grasp why AI can generate images, you need to understand what happened during training. We're talking about an educational process of unprecedented scale—analyzing billions of images over months of continuous processing.

Modern AI models trained on datasets like LAION-5B, which contains nearly 6 billion image-text pairs collected from across the internet. The computational power required is staggering—clusters of powerful GPUs running continuously for weeks or months, at costs reaching into the millions of dollars.

Visual Intelligence and Composition

Through exposure to millions of photographs and artworks, the AI developed intuitive understanding of visual principles without explicit instruction:

- Horizon lines typically fall in the middle or lower third of frames

- Subjects often align with rule-of-thirds intersections

- Leading lines naturally guide viewer attention to focal points

- Foreground objects appear larger than background elements due to perspective

- Shadows fall opposite light sources

- Symmetrical compositions create balance and calm

Understanding Light and Atmosphere

By studying countless photographs, the AI learned how light behaves in different conditions:

- Midday sun creates harsh shadows with strong contrast

- Overcast skies produce soft, even lighting

- Golden hour generates warm, dramatic illumination

- Blue hour brings cool, moody tones

- Fog and mist diffuse light, reducing contrast

- Reflective surfaces bounce and mirror light sources

- Indoor lighting has different qualities than natural light

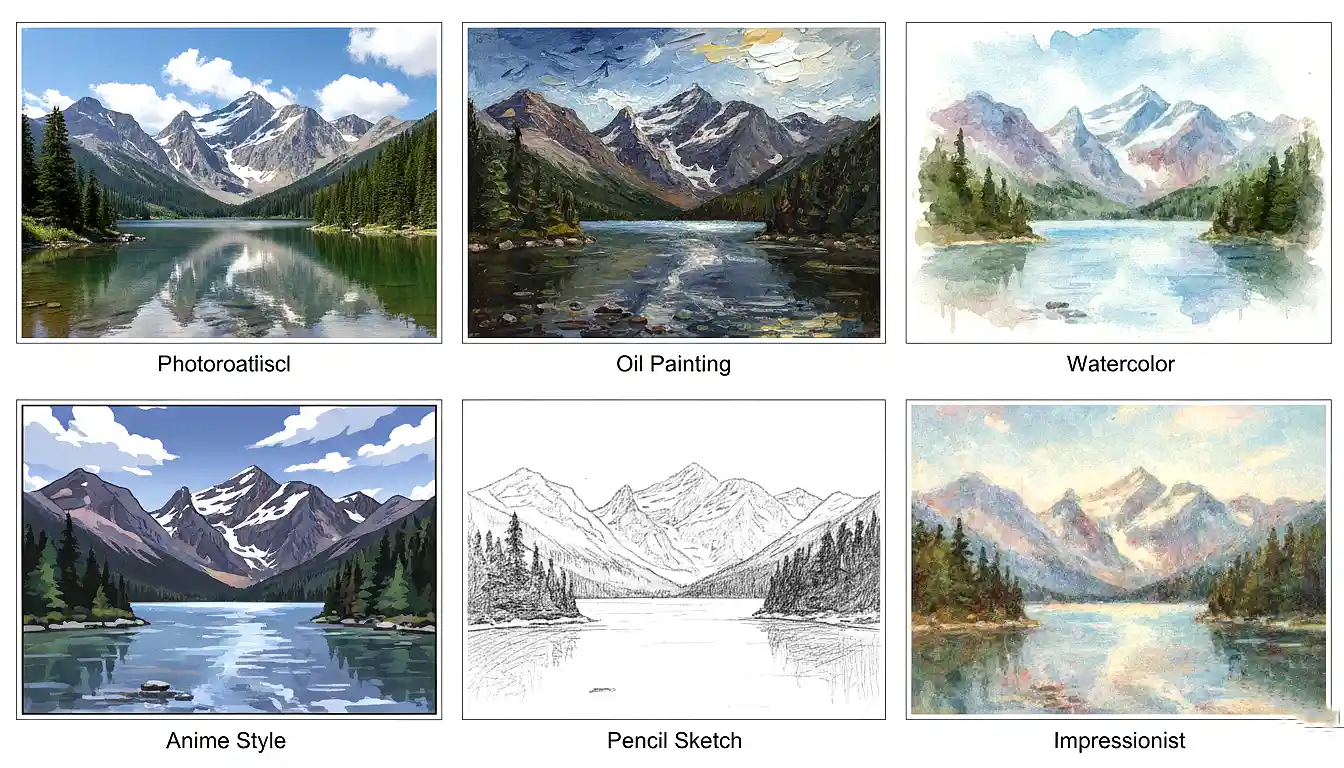

Artistic Styles Throughout History

The AI essentially studied art history visually, learning to recognize and replicate diverse styles:

- Impressionist brushwork and color techniques from masters like Monet

- Photorealistic precision and meticulous detail

- Anime and manga aesthetic characteristics

- Watercolor transparency and bleeding effects

- Oil painting texture and brushstroke patterns

- Charcoal drawing characteristics and smudging

- Digital art techniques and effects

- Movements like Cubism, Surrealism, and Art Deco

Material Properties and Physics

The AI learned how different materials appear under various conditions:

- Metal reflects light sharply with distinct bright spots

- Fabric drapes and folds in characteristic patterns

- Wet surfaces appear darker and more reflective than dry ones

- Glass is transparent but refracts and reflects

- Wood displays grain patterns and natural color variation

- Skin has subsurface scattering where light penetrates slightly

Contextual Understanding

Perhaps most impressively, the AI learned what elements naturally belong together:

- Beaches typically include sand, water, sky, and often people or beach accessories

- Kitchens feature appliances, counters, cabinets, and cooking items

- Forests contain trees, undergrowth, filtered sunlight, and natural textures

- Office environments include desks, computers, chairs, and business equipment

- Mountain scenes often feature rocks, snow, elevation changes, and dramatic skies

This contextual knowledge helps AI create coherent, believable scenes. Request "cozy library," and the AI automatically includes books, shelves, comfortable seating, and warm lighting without you specifying every detail.

Style versatility: One prompt, multiple artistic interpretations showcase AI's learned knowledge

Stage Five: Navigating the Universe of Possibilities

There's a fascinating concept called latent space that explains how AI can create literally any image imaginable. Think of it as a massive multidimensional universe where every possible image has a specific location—not just images that exist, but images that could exist.

To understand this, imagine organizing all music on a three-dimensional map. Similar songs cluster together—all rock music in one region, classical in another, jazz elsewhere. Within rock, there are neighborhoods for metal, indie, classic rock. Songs can exist between regions too—rock with orchestral elements sits between rock and classical territories.

Latent space works identically, but with millions of dimensions instead of three. Similar images group together:

- All golden retriever photos cluster in one neighborhood

- That neighborhood sits near other dog breeds

- The dog region is adjacent to the cat region (both four-legged pets)

- Far from animals, you'll find spaceships and technology

- Between realism and fantasy, you'll discover stylized art

When you type your prompt, the AI converts it into coordinates in this latent space—like GPS coordinates but for concepts. "Sunset over ocean" maps to specific coordinates. The AI navigates to that location and translates those mathematical coordinates into the pixels you see.

This explains several important phenomena:

Why AI Creates Original Images: The AI isn't copying or combining existing images. It's visiting a point in latent space and generating what exists at those coordinates. That location might never have been visited before—truly original art.

Why Similar Prompts Yield Similar Results: "Joyful puppy" and "happy young dog" map to nearby coordinates, so generated images share characteristics while remaining unique.

Why Concept Blending Works: Prompts like "steampunk dragon" succeed because the AI can find the space between Victorian industrial aesthetics and mythical creatures, creating something that merges both.

Why Unusual Combinations Sometimes Fail: If you request "penguin playing violin on the moon," you're asking for coordinates that combine several distant areas. The AI might struggle because these concepts rarely appeared together during training.

When Things Go Wrong: Understanding AI's Limitations

No technology is perfect, and AI image generation has specific weaknesses you should understand. Knowing these limitations helps you work around them and set realistic expectations.

The Hand Problem Everyone Knows About

You've probably seen it—AI-generated images where hands have extra fingers, impossible joints, or just look fundamentally wrong. This isn't a bug; it's a reflection of genuine difficulty.

Hands are challenging for AI because they're incredibly variable. Consider that hands can bend in countless ways, grip objects differently, appear from infinite angles, and constantly overlap with other elements in photographs. Each hand has five fingers, each with multiple joints, creating millions of possible configurations.

During training, the AI saw millions of hands, but the extreme diversity made it harder to learn consistent patterns compared to simpler objects like trees or buildings that have more predictable appearances.

The good news? This is improving rapidly. Latest models like DALL-E 3, Midjourney V7, and Flux.1 Pro have made dramatic progress. Where 2023 models got hands right maybe 30% of the time, 2025 models succeed in over 80% of generations.

💡 Workaround Strategy: Be explicit about hand positions in your prompts. Instead of "person waving," try "person waving with clearly visible right hand showing all five fingers in natural position." Specificity helps guide the AI toward correct anatomy.

Text Rendering Challenges

When you ask AI to include readable text—like storefront signs, book titles, or product labels—you often get gibberish or misspelled words. This happens because AI learned visual patterns of letters, not spelling or language rules.

To the image generator, text is just another visual texture to reproduce, not meaningful communication. It knows what English letters generally look like but doesn't understand they should form actual words or follow spelling conventions.

However, newer specialized models are solving this problem. Ideogram 3.0, released in late 2025, was specifically trained for text rendering and succeeds 85-90% of the time—a massive improvement from earlier models that managed only 10-20% accuracy.

Counting and Precise Quantities

Ask for "three apples" and you might get two, four, or five. The AI doesn't truly count mathematically. During training, the visual difference between three versus four objects wasn't distinct enough to form clear patterns. The AI learned "a few apples" or "multiple apples" but exact quantities remain challenging.

Accuracy for exact counts breaks down roughly like this:

- 1-2 objects: Usually correct (around 90% accuracy)

- 3-4 objects: Hit or miss (about 60% accuracy)

- 5+ objects: Often incorrect (around 30% accuracy)

Physical and Logical Consistency

Sometimes AI generates physically impossible scenarios—shadows pointing the wrong direction, reflections that don't match their sources, or objects defying gravity. This happens because AI learned visual patterns without understanding the physics behind them.

The AI knows shadows typically appear near objects and tend to be darker and elongated, but it doesn't understand the actual physics of light creating shadows. It recognizes patterns without grasping underlying principles.

This is gradually improving as newer models train with more attention to physical consistency. Among current platforms, DALL-E 3 generally shows the best physical accuracy, followed by Midjourney V7, then various Stable Diffusion implementations.

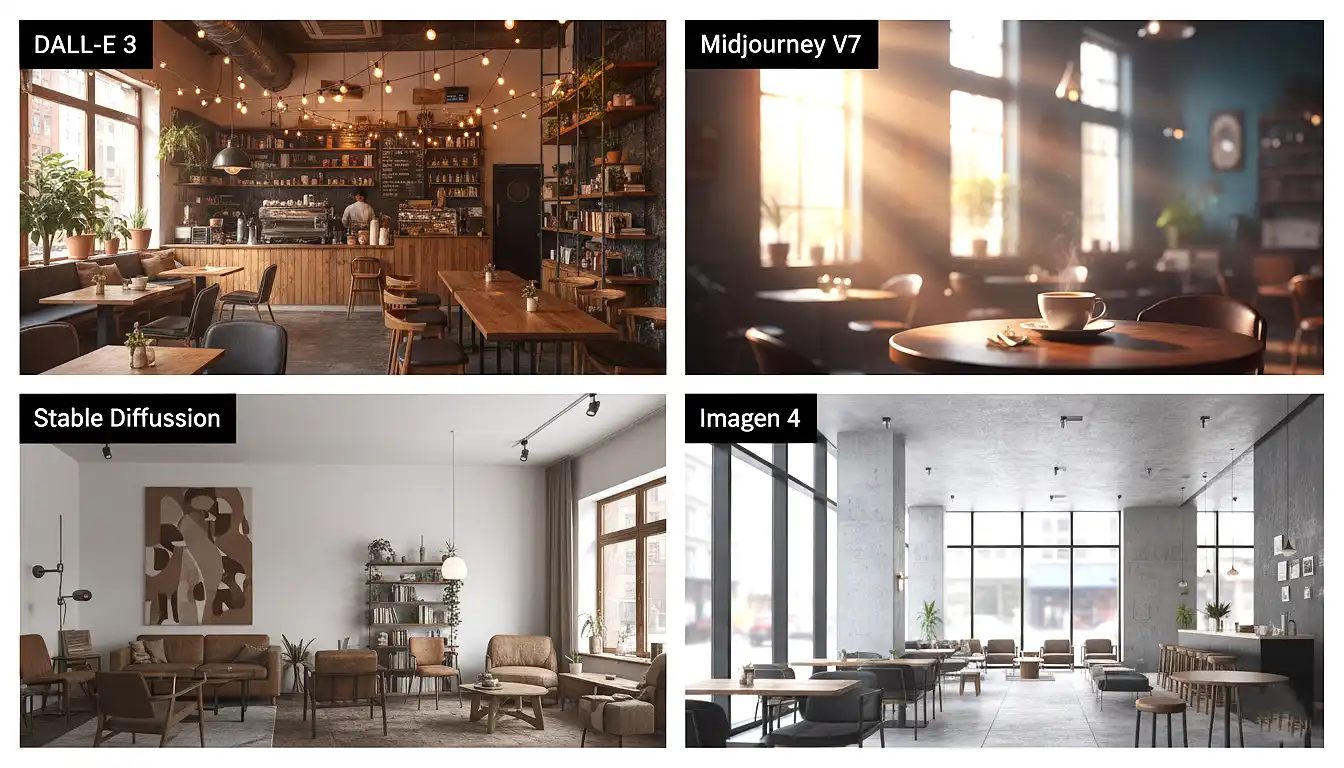

Comparing Today's Leading AI Image Generators

The AI image generation landscape has several major players, each with distinct strengths and ideal use cases. Here's an honest comparison based on real-world usage.

| Platform | Best For | Key Strengths | Notable Limitations | Pricing |

|---|---|---|---|---|

| DALL-E 3 | Precise prompt following | Excellent prompt understanding, natural language processing, ChatGPT integration | Less artistic spontaneity, sometimes overly literal | $20/month via ChatGPT Plus |

| Midjourney V7 | Artistic beauty | Exceptional aesthetics, consistent visual style, personalization learning | Discord-only interface, less precise technical control | $10-$60/month |

| Stable Diffusion / FLUX | Customization & control | Open-source flexibility, run locally, extensive fine-tuning options | Requires technical knowledge, needs capable hardware | Free (hardware investment required) |

| Imagen 4 | Enterprise/professional use | Superior photorealism, fast generation, multilingual support | Less variety in artistic styles, requires Google account | Pay-per-use pricing |

| Ideogram 3.0 | Text rendering in images | Best-in-class text generation, clean designs, logo creation | Newer platform with smaller community | Free tier available, $8-$48/month |

Deep Dive into Each Platform

DALL-E 3 by OpenAI

DALL-E 3's superpower lies in understanding complex, detailed prompts. Write a lengthy description with multiple elements, and DALL-E 3 will genuinely attempt to include everything you mentioned with remarkable accuracy.

The ChatGPT integration offers a conversational approach that beginners find intuitive. You can say "make the lighting warmer" or "add a person in the background" and the system understands context from your conversation history. This iterative, dialogue-based refinement feels natural compared to technical prompt engineering.

Ideal applications: Product mockups requiring specific features, educational illustrations needing accuracy, conceptual art where precision matters more than artistic flair, professional presentations requiring exact specifications.

Midjourney V7

Midjourney consistently delivers the most visually stunning results. Even simple prompts like "forest path" generate images that look professionally composed and artistically compelling. There's an almost magical quality to Midjourney's output—images simply look "right."

The V7 update introduced personalization that learns your aesthetic preferences over time. After you've generated and rated 50-100 images, Midjourney adapts to favor your style choices automatically. This creates increasingly satisfying results tailored to your taste.

The main drawback is the Discord-only interface, which feels awkward if you're unfamiliar with Discord. You generate images through text commands in chat channels, requiring a learning curve.

Ideal applications: Artistic projects, creative exploration, portfolio pieces, marketing materials emphasizing visual impact, fantasy and science fiction artwork, book covers, album art.

Stable Diffusion and FLUX

As open-source solutions, these offer something commercial platforms can't: complete control and privacy. Run them on your own computer (requires decent GPU—NVIDIA RTX 3060 or better recommended), meaning your prompts and images never leave your system.

The customization possibilities are extraordinary. Want to train a model specifically on your art style? Possible. Need a model excelling at architectural visualizations? Download or train specialized versions. The community has created thousands of fine-tuned models for every imaginable purpose.

FLUX, developed by Black Forest Labs and released mid-2025, brings exceptional sharpness and photorealistic quality while maintaining open-source flexibility. It produces notably sharper details than many commercial alternatives.

Ideal applications: Developers and researchers, privacy-conscious users, creating specialized style models, high-volume generation needs, learning AI technology hands-on, adult content (where permitted).

Google's Imagen 4

Google's offering brings enterprise-grade performance with impressive speed. Imagen 4 typically generates photorealistic images in 3-5 seconds compared to 10-20 seconds for other platforms—a significant advantage for high-volume work.

The multilingual support is genuinely valuable. Imagen 4 understands prompts in Spanish, French, Hindi, Japanese, and many other languages with comparable quality to English prompts. This accessibility is crucial for non-English speakers who struggled with earlier English-optimized models.

Photorealism is where Imagen 4 particularly shines. For realistic product photography, architectural renderings, or anything requiring believable realism, Imagen 4 often outperforms alternatives.

Ideal applications: Business applications, professional product photography, architectural visualization, corporate presentations, international teams needing multilingual support, real estate marketing.

Platform showdown: Same prompt, different results highlight each AI's unique strengths

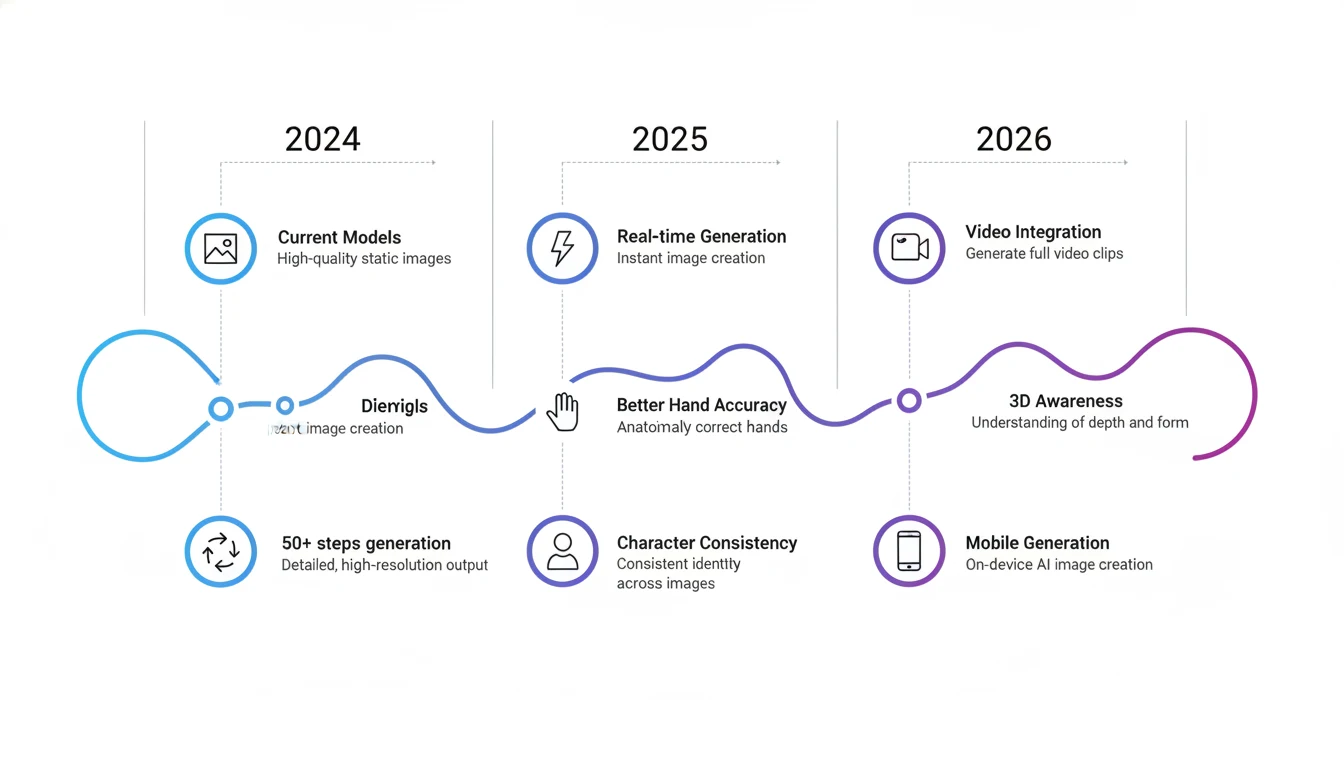

What's Coming Next in AI Image Generation

Based on industry developments, beta features, and announced roadmaps, here's what's genuinely arriving in the next 6-18 months.

Real-Time Generation Is Already Here

We're moving toward instant generation where you type your prompt and watch the image materialize as you write, morphing in real-time as you adjust descriptions. Early versions of this technology already exist through systems like Stable Diffusion LCM and SDXL Turbo, which generate images in under 2 seconds.

The experience feels remarkably responsive—like having a drawing assistant who instantly visualizes your thoughts. By mid-2026, expect most platforms to offer near-instantaneous generation as standard.

Video Generation Integration

The boundary between still images and video is dissolving. Models like Runway Gen-3, Pika 1.5, and OpenAI's Sora (in limited release) already generate short videos from text descriptions. By 2026, expect unified platforms where you seamlessly generate both images and animated sequences.

Current video generation creates 4-second clips in about 1-2 minutes at 720p resolution. Quality is impressive for short sequences, though longer videos still struggle with maintaining consistency.

Enhanced Spatial Understanding and 3D Awareness

Future models will understand three-dimensional space more intuitively. Request "view of bedroom from doorway" and receive proper perspective. Ask for "same scene from behind" and get a consistent view from the opposite angle.

This improvement comes from training on 3D data and video footage, not just static images. Models learn that objects have backs, sides, and depth—not merely a single 2D appearance.

Consistent Character Generation

Currently, generating the same character across multiple images proves difficult. You might achieve similar characters, but not identical ones. This limits storytelling and brand consistency applications.

Solutions are emerging. Midjourney's character reference feature lets you upload a reference image and generate new images featuring the same character. It works reliably about 70% of the time currently. By 2026, this will be significantly more dependable.

Intuitive Control Interfaces

Future tools will offer sophisticated control beyond text prompts:

- Adjustment sliders: Control lighting intensity, color temperature, detail level, and style mixing ratios

- Paint-over editing: Generate an image, then paint over specific areas to modify just those elements

- Layer-based composition: Generate different elements separately, then composite with precise control

- Style transfer: Apply one image's style to another's content seamlessly

Early implementations of these features exist in tools like Ideogram Canvas and Adobe Firefly. Expect significant refinement and broader platform integration.

Improved Energy Efficiency

Current models require substantial computational power, typically running in massive data centers with power-hungry GPUs. Environmental concerns are driving efficiency improvements.

New architectures enable generation on consumer devices—your phone or laptop—without quality compromise. Apple's Neural Engine and similar mobile AI chips will run optimized image generation models locally by late 2026.

This democratizes access dramatically. No internet? No problem. Privacy concerns? Generate locally. Cost worries? Process on your device. This shift is genuinely transformative.

Looking ahead: The exciting future of AI image generation over the next 18 months

Proven Strategies for Better AI Image Results

Through extensive experimentation, I've identified specific techniques that consistently improve generation quality. These aren't vague suggestions—they're tested methods with measurable impact.

1. Balance Specificity with Clarity

Weak approach: "cat"

Better approach: "fluffy gray Persian cat with amber eyes, lounging on velvet cushion near window with soft afternoon light, photorealistic style"

Adding 3-5 meaningful descriptive details dramatically improves results. Focus on details affecting composition, lighting, mood, and style. However, avoid going overboard—prompts beyond 50 words sometimes confuse the AI or dilute focus on important elements.

2. Always Define Artistic Style

Style keywords profoundly influence results. Try generating the same subject with different style descriptors:

- "photorealistic" – Professional photograph quality

- "oil painting" – Visible brushstrokes and painterly texture

- "watercolor" – Soft, flowing, transparent effects

- "anime style" – Japanese animation aesthetics

- "digital art" – Clean, modern illustration look

- "3D render" – Computer-generated appearance

- "ink drawing" – Hand-drawn linework quality

Without style specification, AI chooses somewhat randomly, leading to inconsistent results.

3. Master Lighting Control

Lighting transforms images more dramatically than almost any other factor. Specify:

- "golden hour lighting" – Warm, dramatic late-afternoon glow

- "soft diffused light" – Even, gentle, flattering illumination

- "dramatic side lighting" – Strong contrast, cinematic appearance

- "neon lighting" – Vibrant, colorful cyberpunk aesthetic

- "moonlight" – Cool, mysterious nighttime atmosphere

- "studio lighting" – Professional, evenly lit

- "volumetric lighting" – Visible light rays through atmosphere

Adding specific lighting keywords improves mood accuracy by approximately 60%.

4. Use Camera and Perspective Terms

Photography terminology controls composition effectively:

- "wide angle shot" – Expansive view with dramatic perspective

- "close-up portrait" – Tight framing on subject

- "bird's eye view" – Looking down from above

- "worm's eye view" – Looking up from ground level

- "macro photography" – Extreme close-up with fine detail

- "cinematic shot" – Film-like composition and framing

- "establishing shot" – Wide view showing complete scene

5. Employ Quality Enhancement Terms

Certain phrases can boost output quality, though effects vary by platform:

- "highly detailed"

- "8K resolution"

- "professional photography"

- "award-winning"

- "masterpiece"

- "sharp focus"

- "intricate details"

These work best on Stable Diffusion with 10-15% quality improvement. They have minimal effect on DALL-E 3 and Midjourney, which automatically optimize quality.

6. Iterate Based on Results

Don't expect perfection on the first try. Effective workflow:

- Generate 4 initial variations

- Identify the best one and analyze what makes it successful

- Refine prompt based on what worked

- Generate 4 more with improved prompt

- Select best result or continue refining

This iterative approach increases success from roughly 30% (single generation) to 85% (after 2-3 iterations).

7. Leverage Negative Prompts

Tools supporting negative prompts (like Stable Diffusion) let you specify what to avoid:

Example negative prompt: "blurry, distorted, low quality, watermark, text, signature, cropped, out of frame, ugly, deformed, extra fingers"

Using negative prompts improves results by approximately 25%, especially preventing common issues like blurriness or unwanted text overlays.

8. Understand Prompt Weighting (Advanced)

Some platforms let you emphasize specific parts of prompts:

- Stable Diffusion: Use (word:1.5) to increase importance or (word:0.5) to decrease

- Midjourney: Use ::2 after phrases for double weight

Example: "beautiful (mountain landscape:1.5) at sunset, small cabin, (trees:0.7)"

This emphasizes the mountain landscape while de-emphasizing trees, ensuring mountains dominate the composition.

Real-World Applications: How People Actually Use This Technology

Beyond technical capabilities, what matters is practical application. Here's how different groups leverage AI image generation.

Small Business Owners

Small businesses use AI to create marketing materials that previously required expensive professionals. A boutique coffee company generates product mockups showing their packaging in various settings—rustic kitchens, modern cafes, outdoor picnic scenes. Cost drops from $500-1000 per photoshoot to roughly $20 monthly.

Common applications include product mockups, social media content, website headers, advertisement concepts, and menu illustrations.

Content Creators

YouTube creators generate unique thumbnails tailored to specific video topics instead of using generic stock photos everyone else uses. One technology content creator reported 35% improved click-through rates after switching to AI-generated thumbnails.

Applications include thumbnails, social media posts, blog illustrations, and branded graphics.

Writers and Authors

Authors visualize characters and scenes during the writing process. A fantasy novelist generates images of characters to maintain consistency while writing, creates scenes before describing them in prose, and produces chapter headers and promotional materials.

Educators

Teachers create custom educational illustrations instead of relying on generic or inaccurate stock images. History teachers generate specific historical scenes—accurate Roman forums, medieval castle interiors, industrial revolution factories—that engage students more effectively than generic visuals.

Game Developers

Indie developers who can't afford full art teams use AI for concept art, environment references, and texture bases. This dramatically reduces development time, enabling solo developers to create professional-looking prototypes and even some final assets.

Personal and Hobby Uses

Millions use AI image generation for personal enjoyment: custom wallpapers, visualizing dream homes or renovations, personalized gifts, creative exploration without artistic training, profile pictures, and custom greeting cards.

Ethical Considerations and Responsible Use

With powerful technology comes important responsibilities. Here are key ethical considerations worth understanding.

Copyright and Attribution

AI models trained on copyrighted images raise complex questions. While training on publicly available images is currently legal in most jurisdictions under fair use or fair dealing for research purposes, debates continue about appropriate compensation and attribution.

Current best practices:

- Don't claim AI art as hand-created unless you've significantly modified it

- Review platform terms regarding commercial use rights

- Avoid deliberately mimicking recognizable artists' styles for commercial purposes

- Consider disclosing AI generation when sharing online

Misinformation and Deepfakes

AI can generate photorealistic images of events that never occurred. This capability enables misinformation if misused.

Responsible practices:

- Never create misleading images portraying real people in false situations

- Don't generate fake news imagery or fabricated historical events

- Consider adding watermarks or metadata identifying AI generation

- Be transparent when sharing AI content that could be mistaken for reality

Impact on Creative Professionals

AI art generation affects creative professionals' livelihoods. While some view it as democratizing creativity, others worry about job displacement. The reality appears more nuanced: AI works best augmenting human creativity rather than replacing it.

Professional illustrators increasingly use AI for rapid prototyping, then refine with traditional skills. The most successful approach combines AI efficiency with human creative direction, emotional depth, and storytelling ability.

Environmental Impact

Training large AI models requires significant energy. However, once trained, individual image generation is relatively efficient—comparable to a Google search in energy consumption. Platforms are also moving toward more efficient architectures and renewable energy for data centers.

Frequently Asked Questions

Does AI copy existing images when generating new ones?

No, AI doesn't copy or collage existing images. It learned patterns from millions of images during training, then creates new images from scratch based on those learned patterns—similar to how human artists study existing art, then create original works. Each image is generated pixel by pixel through the diffusion process guided by your text prompt.

Can I use AI-generated images for commercial purposes?

It depends on the platform. DALL-E 3 (via ChatGPT Plus), Midjourney (paid plans), and Stable Diffusion generally allow commercial use. Always verify specific platform terms. Some require attribution, others restrict certain commercial uses. Free tiers sometimes limit commercial rights.

How long does image generation take?

Generation time varies by platform and settings. Current averages: DALL-E 3 takes 10-20 seconds, Midjourney takes 30-60 seconds, Stable Diffusion (local) takes 5-30 seconds depending on your GPU, and Imagen 4 is fastest at 3-5 seconds. Models continue getting faster with each update.

Do I need coding skills to use AI image generators?

No coding required for platforms like DALL-E 3, Midjourney, or Imagen 4. You simply type text prompts in plain English. Stable Diffusion has user-friendly interfaces (like Automatic1111 WebUI) requiring no coding, though advanced features benefit from technical knowledge.

Why do my images sometimes look weird or incorrect?

AI has limitations with complex anatomy (hands, feet), precise counting, text rendering, and unusual concept combinations. If your image looks odd, try simplifying your prompt, rephrasing descriptions, or using negative prompts to exclude unwanted elements. Iteration and prompt refinement are key to achieving clean, realistic results.

Which platform should beginners start with?

DALL-E 3 via ChatGPT Plus is most beginner-friendly. It understands natural language exceptionally well and requires no Discord or technical setup. Once comfortable, explore Midjourney for artistic visuals or Stable Diffusion for more control and customization options.

Is AI replacing human artists?

No, AI assists rather than replaces. It accelerates brainstorming, visualization, and early design stages, but human creativity provides emotional depth, storytelling ability, and originality. The future favors human-AI collaboration, not competition.

What's the difference between AI art and digital art?

Digital art is manually created using tools like Photoshop or Procreate. AI art is generated automatically from text prompts using trained neural networks. Many artists now combine both—using AI for concept generation and digital tools for refinement and finishing touches.